Extracting People's Daily (人民网) search results with Selenium

http://search.people.com.cn/cnpeople/news/getNewsResult.jsp

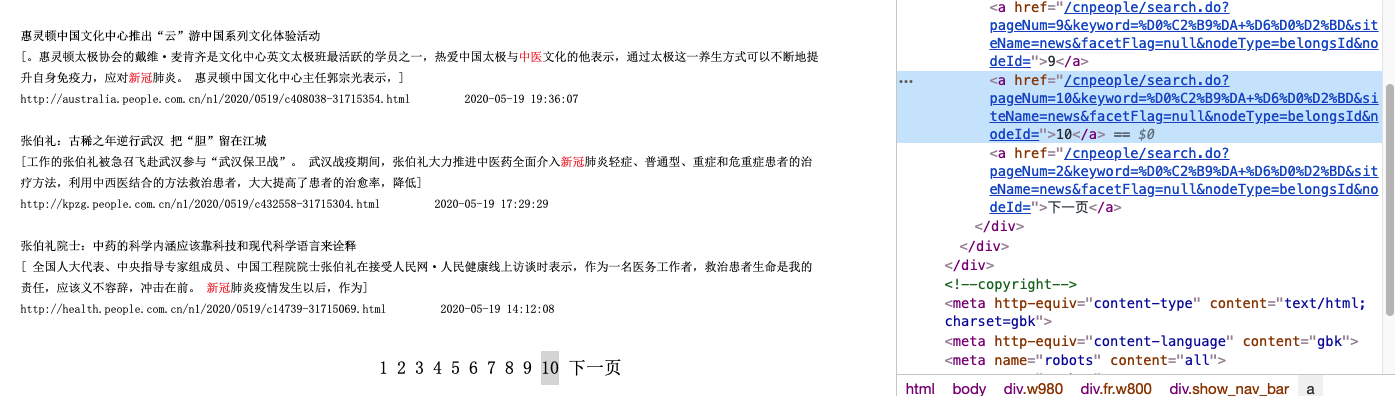

点击下一页页面的URL不变

鼠标右键查看页码

```python
print(*range(1, 3))
```

```
1 2
```

```python
# Link behind the page number "2" (copied from the page source):
# <a href="/cnpeople/search.do?pageNum=2&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=false&nodeType=belongsId&nodeId=">2</a>
# keyword is the GBK percent-encoding of "新冠"; only pageNum changes between pages

url = 'http://search.people.com.cn/cnpeople/search.do?pageNum='
path = '&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId='

# range's end is exclusive: range(1, 10) yields pages 1 to 9
page_num = range(1, 10)
urls = [url + str(i) + path for i in page_num]

for i in urls:
    print(i)
```

```
http://search.people.com.cn/cnpeople/search.do?pageNum=1&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=2&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=3&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=4&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=5&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=6&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=7&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=8&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=9&keyword=%D0%C2%B9%DA&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
```
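The page link can also be decoded and rebuilt with the standard library instead of string concatenation. The following is a minimal sketch, assuming the site expects the keyword percent-encoded as GBK (as the `%D0%C2%B9%DA` in the link suggests). The helper `build_search_url` and the variable `page_urls` are hypothetical names, not part of the original notebook.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE = 'http://search.people.com.cn/cnpeople/search.do'

# Page-2 link copied from the search result page source
href = ('/cnpeople/search.do?pageNum=2&keyword=%D0%C2%B9%DA&siteName=news'
        '&facetFlag=false&nodeType=belongsId&nodeId=')

# Decode the query string; the keyword bytes are GBK, not UTF-8
params = parse_qs(urlsplit(href).query, keep_blank_values=True, encoding='gbk')
print(params['pageNum'], params['keyword'])


def build_search_url(page, keyword, facet_flag='null'):
    """Hypothetical helper: one result-page URL with a GBK-encoded keyword.

    urlencode uses quote_plus by default, so spaces in the keyword become '+'.
    """
    query = urlencode(
        {
            'pageNum': page,
            'keyword': keyword,
            'siteName': 'news',
            'facetFlag': facet_flag,
            'nodeType': 'belongsId',
            'nodeId': '',
        },
        encoding='gbk',
    )
    return f'{BASE}?{query}'


page_urls = [build_search_url(i, '新冠') for i in range(1, 10)]
print(page_urls[0])
```

Changing the keyword then only means passing a different string. For example, `build_search_url(1, '新冠 中医')` yields the `%D0%C2%B9%DA+%D6%D0%D2%BD` form that appears in the URLs used later.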

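Fetching the pages directly with requests does not work

The request is blocked with the notice "提醒:您的访问可能对网站造成危险,已被云防护安全拦截" ("Notice: your access may pose a risk to the website and has been blocked by cloud security protection"). Instead of search results, `requests` receives the block page of the 创宇盾 (365cyd.com) protection service: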

```python
import requests
from bs4 import BeautifulSoup

content = requests.get(urls[0])
content.encoding = 'utf-8'
soup = BeautifulSoup(content.text, 'html.parser')
soup
```


```html
<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8"/>
<style>
body{ background:#eff1f0; font-family: microsoft yahei; color:#969696; font-size:12px;}
.online-desc-con { text-align:center; }
.r-tip01 { color: #969696; font-size: 16px; display: block; text-align: center; width: 500px; padding: 0 10px; overflow: hidden; text-overflow: ellipsis; margin: 0 auto 15px; }
.r-tip02 { color: #b1b0b0; font-size: 12px; display: block; margin-top: 20px; margin-bottom: 20px; }
.r-tip02 a:visited { text-decoration: underline; color: #0088CC; }
.r-tip02 a:link { text-decoration: underline; color: #0088CC; }
img { border: 0; }
</style>
<script>
void(function fuckie6(){if(location.hash && /MSIE 6/.test(navigator.userAgent) && !/jsl_sec/.test(location.href)){location.href = location.href.split('#')[0] + '&jsl_sec' + location.hash}})();
var error_403 = '';
if(error_403 == '') {
error_403 = '当前访问疑似黑客攻击,已被创宇盾拦截。';
}
</script>
</head>
<body>
<div class="online-desc-con" style="width:550px;padding-top:15px;margin:34px auto;">
<a href="http://www.365cyd.com" id="official_site" target="_blank">
<img alt="" id="wafblock" style="margin: 0 auto 17px auto;"/>
<script type="text/javascript">
var pic = '' || 'hacker';
document.getElementById("wafblock").src = '/cdn-cgi/image/' + pic + '.png';
</script>
</a>
<span class="r-tip01"><script>document.write(error_403);</script></span>
<span class="r-tip02">如果您是网站管理员<a href="http://help.365cyd.com/cyd-error-help.html?code=403" target="_blank">点击这里</a>查看详情</span>
<hr/>
<center>client: 36.154.208.6, server: 38ae086, time: 01/Nov/2021:15:30:10 +0800 [80001]</center>
<img alt="" src="/cdn-cgi/image/logo.png"/>
</div>
</body>
</html>
```
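A blocked response can be recognized in code before any parsing is attempted. The following is a minimal sketch that assumes the markers seen in this single block page (the `wafblock` image id and the 创宇盾 text) stay the same across responses. This is an observation from one page, not documented behavior. The names `WAF_MARKERS` and `looks_blocked` are hypothetical.

```python
# Marker strings taken from the block page shown above (assumed to be stable)
WAF_MARKERS = ('创宇盾', 'wafblock')


def looks_blocked(html: str) -> bool:
    """Return True if the HTML looks like the 创宇盾 block page rather than results."""
    return any(marker in html for marker in WAF_MARKERS)


print(looks_blocked('<img alt="" id="wafblock"/>'))
print(looks_blocked('<ul><li><a href="http://example.com/a.html">title</a></li></ul>'))
```

For example, `looks_blocked(content.text)` could be checked right after `requests.get(...)` to stop early instead of parsing the block page as if it held results.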

Here `urls` holds the search URLs for the keyword 新冠 中医 (GBK percent-encoded as `%D0%C2%B9%DA+%D6%D0%D2%BD`):

```python
for i in urls:
    print(i)
```

```
http://search.people.com.cn/cnpeople/search.do?pageNum=1&keyword=%D0%C2%B9%DA+%D6%D0%D2%BD&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
http://search.people.com.cn/cnpeople/search.do?pageNum=2&keyword=%D0%C2%B9%DA+%D6%D0%D2%BD&siteName=news&facetFlag=null&nodeType=belongsId&nodeId=
```

```python
import time

from bs4 import BeautifulSoup
from selenium import webdriver

browser = webdriver.Chrome()
dat = []

for k, j in enumerate(urls):
    print(k + 1)
    time.sleep(1)  # pause between page loads
    browser.get(j)
    soup = BeautifulSoup(browser.page_source, 'html.parser')
    d = soup.find_all('ul')

    # fewer than two <ul> elements: the result list did not render, so reload
    while len(d) < 2:
        print(k + 1, 'null error and retry')
        time.sleep(1)
        browser.get(j)
        soup = BeautifulSoup(browser.page_source, 'html.parser')
        d = soup.find_all('ul')

    # skip the first <ul>; each remaining <ul> holds one search hit
    for i in d[1:]:
        urli = i.find('a')['href']  # article URL
        title = i.find('a').text  # article title
        # the last <li> ends with the publication time, after a non-breaking space
        time_stamp = i.find_all('li')[-1].text.split('\xa0')[-1]
        dat.append([k + 1, urli, title, time_stamp])

browser.quit()  # end the WebDriver session (close() only closes the window)
len(dat)
```

```
1
2
3
4
5
6
7
8
9
450
```
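The `while len(d) < 2` loop above reloads forever if a page never renders its results. A bounded alternative can be sketched as a generic helper. The name `load_with_retry` and the default of 5 attempts are assumptions, not part of the original code. Only the standard library is used, so the retry logic can be tried without a browser.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar('T')


def load_with_retry(load: Callable[[], T], is_ok: Callable[[T], bool],
                    max_tries: int = 5, delay: float = 1.0) -> Optional[T]:
    """Call load() until is_ok(result) is true, at most max_tries times.

    Returns the first acceptable result, or None if every attempt failed.
    """
    for attempt in range(1, max_tries + 1):
        result = load()
        if is_ok(result):
            return result
        print(attempt, 'null error and retry')
        if attempt < max_tries:
            time.sleep(delay)  # wait before reloading
    return None


# Hypothetical usage inside the page loop above (needs a running browser):
# def load_page():
#     browser.get(j)
#     return BeautifulSoup(browser.page_source, 'html.parser').find_all('ul')
#
# d = load_with_retry(load_page, lambda uls: len(uls) >= 2)
# if d is None:
#     continue  # skip this page instead of looping forever
```

Returning `None` rather than raising lets the scraping loop skip a dead page and keep the records collected so far.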

Running the same cell again over 29 result pages produced the log below. Page 11 needed one reload, and `dat` ended up with 1416 records:

```
1
2
3
4
5
6
7
8
9
10
11
11 null error and retry
12
13
14
15
16
17
18
19
20
21
22
23
24
25
26
27
28
29
1416
```

```python
import pandas as pd

df = pd.DataFrame(dat, columns=['pagenum', 'url', 'title', 'time'])
df.head()
```

| | pagenum | url | title | time |
|---|---|---|---|---|
| 0 | 1 | http://world.people.com.cn/n1/2021/1101/c1002-... | [美国白宫新闻秘书感染新冠病毒] | 2021-11-01 13:17:19 |
| 1 | 1 | http://society.people.com.cn/n1/2021/1101/c100... | [新冠疫苗第三针开打 会有第四、第五针吗] | 2021-11-01 09:09:21 |
| 2 | 1 | http://society.people.com.cn/n1/2021/1101/c100... | [儿童新冠疫苗接种启动] | 2021-11-01 09:04:42 |
| 3 | 1 | http://health.people.com.cn/n1/2021/1101/c1473... | [改变过往新冠认知 积极引导3—11岁人群疫苗“应接尽接”] | 2021-11-01 08:57:45 |
| 4 | 1 | http://usa.people.com.cn/n1/2021/1101/c241376-... | [美国空军大量士兵拒绝接种新冠疫苗] | 2021-11-01 08:47:21 |
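The `time` column is still plain text. The following standard-library sketch repeats the loop's extraction step (`split('\xa0')[-1]`) and then parses the result into a `datetime`. The helper name `parse_hit_time` and the sample `<li>` prefix are made up; only the timestamp format is taken from the table above.

```python
from datetime import datetime


def parse_hit_time(li_text: str) -> datetime:
    """Keep the text after the last non-breaking space and parse it as a timestamp."""
    stamp = li_text.split('\xa0')[-1].strip()
    return datetime.strptime(stamp, '%Y-%m-%d %H:%M:%S')


# Hypothetical <li> text: a source label, two non-breaking spaces, then the time
print(parse_hit_time('人民网\xa0\xa02021-11-01 13:17:19'))
```

For the whole column, `pd.to_datetime(df['time'], format='%Y-%m-%d %H:%M:%S')` does the same in pandas, which makes sorting and filtering by date straightforward.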

```python
len(df)
```

```
67
```

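```python
# note: the reading section below loads ./data/people_com_search20200606.csv;
# make sure both paths refer to the same file
df.to_csv('../data/people_com_search20200606.csv', index=False)
```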

Reading data with Pandas

```python
# count physical lines in the file (header included)
with open('./data/people_com_search20200606.csv', 'r', encoding='utf-8') as f:
    lines = f.readlines()

len(lines)
```

```
1423
```

```python
import pandas as pd

df2 = pd.read_csv('./data/people_com_search20200606.csv')
df2.head()
len(df2)
```

```
1416
```
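The file has 1423 lines, but `read_csv` returns only 1416 rows. Even after allowing for the header line, that leaves six more physical lines than records. One plausible explanation, not checked against the actual file, is that a few titles contain line breaks. `to_csv` quotes such fields, `read_csv` joins them back into a single row, and `readlines()` counts every physical line. The self-contained illustration below uses made-up data.

```python
import csv
import io

# Hypothetical CSV: the second title contains a line break, so the writer quotes it
buf = io.StringIO()
writer = csv.writer(buf)
writer.writerow(['pagenum', 'url', 'title', 'time'])
writer.writerow([1, 'http://example.com/a.html', 'one-line title', '2021-11-01 13:17:19'])
writer.writerow([1, 'http://example.com/b.html', 'title with\na line break', '2021-11-01 09:09:21'])
text = buf.getvalue()

physical_lines = text.splitlines()              # what readlines() would count
records = list(csv.reader(io.StringIO(text)))   # what a CSV parser sees
print(len(physical_lines), len(records))
```

To locate such rows in the real data, `df2[df2['title'].str.contains('\n', na=False)]` would list the titles that span several lines.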

```python
for i in df2['url'].tolist()[:10]:
    print(i)
```

```
http://health.people.com.cn/n1/2020/0606/c14739-31737564.html
http://health.people.com.cn/n1/2020/0606/c14739-31737424.html
http://politics.people.com.cn/n1/2020/0606/c1001-31737282.html
http://opinion.people.com.cn/n1/2020/0606/c1003-31737274.html
http://health.people.com.cn/n1/2020/0605/c14739-31736476.html
http://politics.people.com.cn/n1/2020/0605/c1001-31735966.html
http://world.people.com.cn/n1/2020/0604/c1002-31735815.html
http://www.people.com.cn/n1/2020/0604/c32306-31735734.html
http://cpc.people.com.cn/n1/2020/0604/c419242-31735244.html
http://health.people.com.cn/n1/2020/0604/c14739-31734772.html
```
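The article URLs follow a visible pattern: channel subdomain, `/n1/`, year, month and day, then a node id and an article id. The sketch below splits them into those parts. The regular expression is inferred from the ten URLs above and is an assumption, not a documented URL scheme. `parse_article_url` is a hypothetical name.

```python
import re
from datetime import date

# Pattern inferred from the printed URLs (assumption):
# http://<channel>.people.com.cn/n1/<yyyy>/<mmdd>/c<node>-<article>.html
ARTICLE_URL = re.compile(
    r'^https?://(?P<channel>[\w-]+)\.people\.com\.cn/n1/'
    r'(?P<year>\d{4})/(?P<mmdd>\d{4})/c(?P<node>\d+)-(?P<article>\d+)\.html$'
)


def parse_article_url(url):
    """Split a people.com.cn article URL into channel, date, node id and article id.

    Returns None for URLs that do not match the assumed pattern.
    """
    m = ARTICLE_URL.match(url)
    if m is None:
        return None
    mmdd = m['mmdd']
    return {
        'channel': m['channel'],
        'date': date(int(m['year']), int(mmdd[:2]), int(mmdd[2:])),
        'node': m['node'],
        'article': m['article'],
    }


print(parse_article_url('http://health.people.com.cn/n1/2020/0606/c14739-31737564.html'))
```

Applied to the whole column, `df2['url'].map(parse_article_url)` would give one dict per row, with `None` for any URL outside the assumed pattern.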